Cognitive AI

This folder is intended to collect considerations and inform the requirements analysis for supporting Cognitive AI agents in a manner that specifically meets the HumanCentricAI requirements.

Whilst others may not consider this to be specifically about Cognitive AI, it's the best term I've found so far for the general field of endeavour.

Applied Theory: Notes re: Webizen

As noted in the SafetyConsiderations document, the use and development of Cognitive AI systems by me and in works specifically related to me, and the advancement of my works by others, are intended to advance the tooling required to support HumanCentricDigitalIdentity, [[WebScience/PeaceInfrastructureProject/SafetyProtocols/HumanCentricAI]] and related [[PCT-Webizen-Notes/Webizen/EconomicSystems/Centricity]] considerations specifically. Therefore, these works are not intended to be used to deploy AI agents that act in a NobodyAI-like manner or methodology, and particular protections are sought to ensure that my works are not employed for those purposes.

These objectives are constituent parts of the requirements for EndingDigitalSlavery.

Further Notes about Webizen

Historical works sought to produce what I called a 'knowledge banking' ecosystem methodology, which depended on what was in effect a belief: that organisations would want to usefully support human agency and the fundamental requirements necessary to do so, and in turn benefit from operating infrastructure that supported those requirements. Yet this isn't what happened.

The commercial deployment of works, particularly those around 'Digital Identity', specifically excluded support for what is essentially a matter of human rights, even though the consequence of doing so was, in effect, a choice to institutionally support SocialAttackVectors. Within these systems, the 'agent' was intended to support the requirements for what is now being illustrated as HumanCentricOntology.

More information about these former 'designs' is provided in documents like [[TheSemanticInforg&TheHumanCentricWeb — RealityCheckTech]] that have been copied into this documentation repo.

So, whilst mortified, and subject to ongoing harms by people who I consider simply to be bad people, I set about trying to figure out how to define this ontology. This involved debating it with leading minds worldwide via forums focused on the science of consciousness and all sorts of weird and wonderful advanced concepts. These in turn led to debates about the issues that limited the means to produce productive work in the field, as is required to support FreedomofThought, [[WebScience/PeaceInfrastructureProject/SafetyProtocols/HumanCentricAI]], etc.

This led to an epiphany: the issues with owl:Thing go away, to some degree, if the mission is to produce a method whereby persons are able to 'buy' (and own) their own 'robots' (AI). The situation pivoted in a fairly remarkable way once I shifted away from the impossible situation of having to contend with well-funded adversaries who had absolutely no interest whatsoever in EndingDigitalSlavery (indeed, arguably they seek the opposite, whilst simultaneously seeking to have others believe that they're doing so, gainfully, only because of other people), and away from a person's 'digital twin' (the digital embodiment of a person's existence and all related records), towards an alternative: defining an 'AI agent' that embodies what we want to have, own and 'interact' with in our lives. This then led to my realisation that this is what I needed to do with the old term 'webizen', which I had formerly used, and for which I had 'saved' webizen.org so that I could do something good with it in future.

In the end, the Webizen is the artificial intelligence agent that is designed to support the needs of its owner. If the owner is a legal entity (i.e. a company or other group entity), then it must interact with other webizens that are owned by the individuals (human beings) these systems (tools) are intended to support, as the conduit of responsibility for our biosphere.

In order to make these 'robots' work, there needs to be a method to define 'core logic' and related systems requirements. The development of Cognitive AI ecosystem components is critical to realising those goals.

Whilst human beings naturally develop comprehension of many things, including natural language, robots (software) are able to develop comprehension of many computational things.

The objective is to produce personal and private AI agents (webizens) that are equipped to support the tasks that human beings are not best equipped to do.

In effect, it's a long way down the track from the time when electronic calculators were introduced and made usable by students in schools, etc.

Yet presently, the use of advanced AI generally requires interactions to be performed via third-party systems that are somewhat 'global' in nature. Fundamentally, these systems are not 'private', and there is an array of implications that is likely to lead some people to seek an alternative. Systems that seek to provide such an alternative need to process complex logic in order to support most of the linked requirements.

Description of CogAI

Cognitive artificial intelligence (AI) is a type of AI that is designed to mimic the cognitive abilities of humans, such as learning, problem-solving, decision-making, and natural language processing. It is based on the idea that the human brain is a computational system that can be emulated by a machine, and it seeks to build AI systems that can perform tasks that require human-like cognition.

Cognitive AI technologies include machine learning, natural language processing, and machine vision, which allow machines to learn from data, understand and generate human language, and perceive and understand the visual world in a way that is similar to humans. These technologies can be applied in a variety of fields, including healthcare, finance, education, and customer service, to assist humans in making decisions, providing personalized recommendations, and completing tasks more efficiently.

However, cognitive AI also raises ethical and societal concerns, such as the potential for automation to displace human workers, the need for transparency and explainability in AI decision-making, and the potential for AI to perpetuate and amplify biases present in the data used to train it. As a result, the development and use of cognitive AI requires careful consideration of these issues and the responsible design and deployment of these technologies.

UseCases

Some of the initial use-cases for CogAI will include [[TheValuesProject]] and, within it, the ability to process a multitude of [[WebScience/PeaceInfrastructureProject/SafetyProtocols/ValuesCredentials]]. This in turn supports Webizen owners in evaluating whether and how an activity may be compliant with, compatible with and/or promotional of those values, or whether and how it may conflict with them.
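As a minimal sketch of that evaluation step, assume a drastically simplified model in which each values credential lists activity properties that promote or violate a value. The `ValuesCredential`, `Activity`, `assess` and `evaluate` names are hypothetical and are not taken from TheValuesProject or any ValuesCredentials specification; the point is only to show that the agent produces per-value assessments, while the owner remains the one who decides.

```python
from dataclasses import dataclass, field
from enum import Enum


class Assessment(Enum):
    PROMOTES = "promotes"
    COMPATIBLE = "compatible"
    CONFLICTS = "conflicts"


@dataclass(frozen=True)
class ValuesCredential:
    """Hypothetical, heavily simplified stand-in for a values credential."""
    name: str
    promoted_by: frozenset[str]  # activity properties that advance the value
    violated_by: frozenset[str]  # activity properties that conflict with it


@dataclass
class Activity:
    description: str
    properties: set[str] = field(default_factory=set)


def assess(activity: Activity, credential: ValuesCredential) -> Assessment:
    # Conflicts take precedence: any violating property flags the activity.
    if activity.properties & credential.violated_by:
        return Assessment.CONFLICTS
    if activity.properties & credential.promoted_by:
        return Assessment.PROMOTES
    return Assessment.COMPATIBLE


def evaluate(activity: Activity,
             credentials: list[ValuesCredential]) -> dict[str, Assessment]:
    """One assessment per credential; the owner, not the code, decides what to do."""
    return {c.name: assess(activity, c) for c in credentials}


privacy = ValuesCredential("privacy",
                           promoted_by=frozenset({"local-processing"}),
                           violated_by=frozenset({"shares-data-with-third-party"}))
transparency = ValuesCredential("transparency",
                                promoted_by=frozenset({"explains-decision"}),
                                violated_by=frozenset())
upload = Activity("send records to a cloud analytics service",
                  {"shares-data-with-third-party", "explains-decision"})
result = evaluate(upload, [privacy, transparency])
```

Here the same activity conflicts with one value while promoting another, which is exactly the kind of mixed outcome an owner would need surfaced rather than silently resolved. A real implementation would need far richer semantics than property-set intersection.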

Requirements

There must be an open-standards-based way to perform these sorts of procedural knowledge representation tasks. This will in turn support productivity and reduce and/or clarify the risks associated with behaviours and activities.

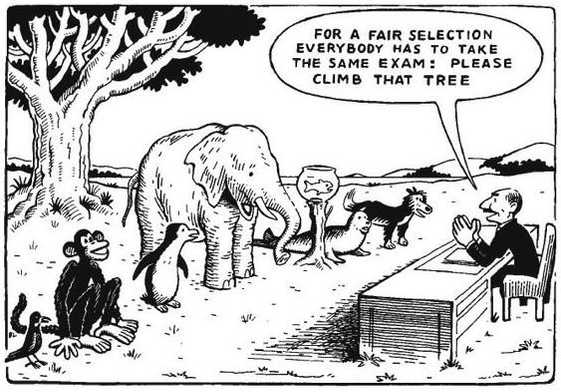

The area has an array of very complex requirements; fundamentally, however, the Web Science requirement is to figure out how to form a #HumanCentric approach, so that whilst the tools may offer suggestions and/or support, it is still only humans who are responsible for making decisions, not Active Artificial Agents (#AI).
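One way to express this principle in code, assuming a hypothetical `Suggestion`/`Decision` model (not drawn from any existing Webizen or CogAI API), is to make everything the agent produces inert until a human approves it. In this sketch the `ask_human` callback stands in for whatever user interface actually presents the suggestion.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Suggestion:
    """Something the agent proposes; it has no effect on its own."""
    action: str
    rationale: str


@dataclass(frozen=True)
class Decision:
    suggestion: Suggestion
    approved: bool
    decided_by: str  # the human owner, never the agent


def decide(suggestion: Suggestion, owner: str,
           ask_human: Callable[[Suggestion], bool]) -> Decision:
    # The agent supplies only the suggestion; approval comes from the human callback.
    return Decision(suggestion, ask_human(suggestion), decided_by=owner)


def execute(decision: Decision, perform: Callable[[str], None]) -> bool:
    # Nothing is performed unless a human explicitly approved it.
    if not decision.approved:
        return False
    perform(decision.suggestion.action)
    return True


# Usage: a stand-in "human" who approves, then one who declines.
performed: list[str] = []
proposal = Suggestion("decline data-sharing request",
                      "conflicts with the owner's privacy values")
approved_run = execute(decide(proposal, "alice", lambda s: True), performed.append)
declined_run = execute(decide(proposal, "alice", lambda s: False), performed.append)
```

The design choice is that `execute` accepts only a `Decision`, never a bare `Suggestion`, so there is no code path by which the agent acts without a recorded human decision.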

W3C Cognitive AI CG

Work to produce an open standard for Cognitive AI is currently being undertaken in the W3C CogAI CG.

"The real world is frustratingly uncertain, incomplete and inconsistent. This is challenging for traditional approaches to information systems, and a new paradigm is needed that combines symbolic and statistical techniques, building upon decades of work in the cognitive sciences, and over 500 million years of natural selection. This will allow us to create cognitive agents that learn and reason based upon prior knowledge and past experience, and which can satisfy the need for, transparency in decision making, and continuous learning for adapting to ever changing needs. This community group will address opportunities for cognitive agents using graphs, statistics, rules and graph algorithms, starting with an amalgam of RDF and Property Graphs, together with Web architecture for cognitive databases."

Source: W3C CogAI CG

Note also the GitHub link for the CogAI.
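To illustrate what "an amalgam of RDF and Property Graphs" can mean, here is a minimal sketch assuming a toy in-memory store: nodes carry key/value properties (property-graph style), relationships are subject-predicate-object triples (RDF style), and node properties are also exposed as triples so that a single pattern matcher covers both views. `HybridGraph` and its methods are hypothetical; this is not the CG's own notation or tooling.

```python
from collections import defaultdict
from typing import Iterator, Optional

Triple = tuple[str, str, str]


class HybridGraph:
    """Toy store mixing property-graph nodes with RDF-style triples (hypothetical)."""

    def __init__(self) -> None:
        self.props: dict[str, dict[str, str]] = defaultdict(dict)
        self.edges: set[Triple] = set()

    def add_node(self, node_id: str, **properties: str) -> None:
        # Property-graph view: attributes stored directly on the node.
        self.props[node_id].update(properties)

    def add_edge(self, subject: str, predicate: str, obj: str) -> None:
        # RDF view: a relationship is a subject-predicate-object triple.
        self.edges.add((subject, predicate, obj))

    def triples(self) -> Iterator[Triple]:
        # Node properties are exposed as triples too, so one matcher covers both.
        yield from self.edges
        for node, props in self.props.items():
            for key, value in props.items():
                yield (node, key, value)

    def match(self, s: Optional[str] = None, p: Optional[str] = None,
              o: Optional[str] = None) -> list[Triple]:
        # None acts as a wildcard, as in a basic triple pattern; results are sorted.
        return sorted(t for t in self.triples()
                      if (s is None or t[0] == s)
                      and (p is None or t[1] == p)
                      and (o is None or t[2] == o))


g = HybridGraph()
g.add_node("dog1", name="Fido", age="4")
g.add_edge("dog1", "isa", "dog")
g.add_edge("dog", "isa", "mammal")
```

With this data, `g.match(s="dog1")` returns the node's properties and its edge together, which is the practical benefit of treating both representations uniformly.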

CogAI as a toolchain for Cognitive Databases

What are Cognitive Databases?

Description by ChatGPT: A cognitive database is a type of database that can understand natural language queries and extract meaning from unstructured data. It uses techniques from artificial intelligence and natural language processing to analyze and understand the data stored within the database, making it more easily accessible to users. This allows for more efficient and accurate data retrieval, as well as the ability to perform more complex queries and analysis.

Cognitive databases can also learn and adapt over time, by analyzing user interactions and feedback to improve the accuracy of their analysis and responses. Additionally, they can provide insights and recommendations based on the data they have analyzed, and can even generate natural language responses to user queries.

Cognitive databases are increasingly being used in a variety of industries, including healthcare, finance, and retail, to help organizations better understand and make use of the large amounts of data they collect. They can also be used to improve customer service by allowing customers to interact with the database using natural language, rather than having to learn a specific query language.

It's important to note that cognitive databases are still relatively new and there is ongoing research to improve their capabilities and make them more widely adopted.

My Question: What is the data structure for a cognitive database?

The data structure for a cognitive database can vary depending on the specific implementation and the type of data being stored. However, some common elements include:

- A structured data layer: This layer typically stores structured data in tables or columns, similar to a traditional relational database.

- An unstructured data layer: This layer is used to store unstructured data such as text, images, and audio. It can be stored in various forms such as raw text, XML, JSON, etc.

- A semantic layer: This layer is used to extract meaning from the unstructured data and provide a way to query it using natural language.

- A machine learning layer: This layer can be used to train machine learning models to understand the data and improve the accuracy of the database's analysis and responses.

- A knowledge graph: This layer is used to store the relationships between data entities and make it easy to traverse the data.

- A natural language processing (NLP) layer: This layer is used to analyze and understand natural language text, such as that found in user queries or unstructured data. It can be used to extract entities, sentiment, and intent from text and make it more easily searchable and understandable.

- A reasoning layer: This layer can be used to reason over the data and make inferences based on it. It can help the database understand the context of a query and provide more accurate responses.

- An ontology layer: This layer can be used to represent the concepts and relationships in the data using a formal ontology. This can be used to help the database understand the meaning of the data and make it more easily searchable.

- A visualization layer: This layer can be used to display the data in a meaningful way, such as through charts, graphs, or maps. This can make it easier for users to understand and interact with the data.

It's important to note that the specific data structure of a cognitive database will depend on the particular implementation and use case, and not all cognitive databases will include all of these elements. The key feature is that it can understand and extract meaning from unstructured data, making it more easily accessible to users.
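To make three of the layers listed above more concrete (knowledge graph, ontology and reasoning), here is a minimal sketch assuming an in-memory store and a simple subclass-based class hierarchy. `MiniCognitiveStore` and its methods are hypothetical names for illustration only; the natural language, machine learning and visualization layers are deliberately left out.

```python
from collections import defaultdict
from typing import Optional


class MiniCognitiveStore:
    """Hypothetical toy store combining three of the layers listed above.

    Ontology layer: a class hierarchy (subclass_of).
    Knowledge graph: typed entities (instance_of) and relationships (relations).
    Reasoning layer: inferring types through the class hierarchy.
    """

    def __init__(self) -> None:
        self.subclass_of: dict[str, set[str]] = defaultdict(set)
        self.instance_of: dict[str, set[str]] = defaultdict(set)
        self.relations: set[tuple[str, str, str]] = set()

    def add_subclass(self, child: str, parent: str) -> None:
        self.subclass_of[child].add(parent)

    def add_instance(self, entity: str, cls: str) -> None:
        self.instance_of[entity].add(cls)

    def relate(self, subject: str, predicate: str, obj: str) -> None:
        self.relations.add((subject, predicate, obj))

    def superclasses(self, cls: str) -> set[str]:
        # Transitive closure over subclass_of; the seen-set makes it cycle-safe.
        seen: set[str] = set()
        stack = [cls]
        while stack:
            for parent in self.subclass_of.get(stack.pop(), set()):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen

    def types_of(self, entity: str) -> set[str]:
        # Asserted types plus every type inferred from the hierarchy.
        inferred = set(self.instance_of.get(entity, set()))
        for cls in list(inferred):
            inferred |= self.superclasses(cls)
        return inferred

    def instances_of(self, cls: str) -> set[str]:
        # "Which entities are a <cls>?", answered with inference.
        return {e for e in self.instance_of if cls in self.types_of(e)}

    def related(self, subject: str, predicate: str,
                of_type: Optional[str] = None) -> set[str]:
        # Graph traversal, optionally filtered by an (inferred) type.
        return {o for (s, p, o) in self.relations
                if s == subject and p == predicate
                and (of_type is None or of_type in self.types_of(o))}


db = MiniCognitiveStore()
db.add_subclass("Clinic", "HealthcareProvider")
db.add_subclass("HealthcareProvider", "Organisation")
db.add_instance("acme-clinic", "Clinic")
db.add_instance("alice", "Person")
db.relate("alice", "registeredWith", "acme-clinic")
```

Nothing in the data states that `acme-clinic` is an `Organisation`; the reasoning layer derives it from the ontology, so `db.instances_of("Organisation")` and `db.related("alice", "registeredWith", of_type="Organisation")` both find it.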

NOTES:

Below are notes relevant to the work described (currently) on the PCTOntologyModelling page about how to form a method for top-level ontological structures.