Vocabulary Modelling

NOTE: Related works are located in the PCTOntologyModelling folder. This document is intended to be more technical, as I am working towards an implementation method.

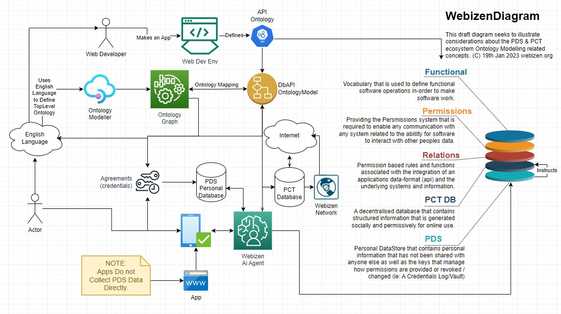

The diagram above attempts to provide a basic overview of how ontologies (vocabularies / languages) are used by these systems to define 'semantics'.

Human Users

For Human Users, the present focus is English (in particular AustralianEnglish, as well as other variants of it), as it is the only natural spoken / written language I am competent in. Phonetics, tonality and other factors play a role in spoken language; punctuation, grammar and spelling play a role in written natural language.

Courts use natural language to process disputes and to reach outcomes that are both common-sense and defined. Further considerations are being made in the [[EnglishLanguageModelling]] document. This, in turn, is not simply about English; it is more broadly about the series of considerations associated with defining a solution for this component in a BestEfforts and FitForPurpose manner.

Computational Interference

Many negative implications arise when even basic AI-related processes (i.e. spellcheck helpers) redefine a term as another term in a way that changes the meaning of the sentence or concept the human being intended to communicate.

These sorts of events create logs and complicate assessments. Note that I am not aware of any .wellknown command that allows a human being to assert a correction to an action made by a machine, nor of any existing syntax that ensures a machine's interference with a person's words is logged as part of that data-file.
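
As an illustration only, the missing capability could be sketched as an append-only edit log that travels with the text: every machine substitution is recorded alongside the term it replaced, and a human can later assert a correction that restores their original term, with that correction logged too. The names (`EditEvent`, `TextRecord`, `machine_substitute`, `human_correct`) and the record layout are hypothetical; this is not an existing standard or .wellknown command.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class EditEvent:
    """One logged change to a token (hypothetical record layout)."""
    index: int      # position of the token in the text
    before: str     # token prior to the change
    after: str      # token after the change
    actor: str      # "machine:<tool>" or "human:<id>"
    timestamp: str  # UTC time of the change, ISO 8601


@dataclass
class TextRecord:
    tokens: List[str]
    log: List[EditEvent] = field(default_factory=list)

    def _record(self, index: int, new: str, actor: str) -> None:
        # Log the change before applying it, so nothing is silently rewritten.
        event = EditEvent(index, self.tokens[index], new, actor,
                          datetime.now(timezone.utc).isoformat())
        self.log.append(event)
        self.tokens[index] = new

    def machine_substitute(self, index: int, new: str, tool: str) -> None:
        """A machine (e.g. autocorrect) replaces a token; the change is logged."""
        self._record(index, new, f"machine:{tool}")

    def human_correct(self, index: int, person: str) -> None:
        """A human asserts that the latest machine edit at `index` was wrong
        and restores the token it replaced; the correction is logged too."""
        for event in reversed(self.log):
            if event.index == index and event.actor.startswith("machine:"):
                self._record(index, event.before, f"human:{person}")
                return
        raise ValueError(f"no machine edit logged at index {index}")

    def text(self) -> str:
        return " ".join(self.tokens)


record = TextRecord("the defendant's affect was flat".split())
record.machine_substitute(2, "effect", tool="autocorrect")  # meaning changed
record.human_correct(2, person="author")                    # human reasserts intent
# record.text() is "the defendant's affect was flat" again, and
# record.log holds both the machine edit and the human correction.
```

The design choice here is that the log is never rewritten: the machine's interference remains visible after the human correction, which is what an assessment of the data-file would need.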

Software Agents

Software agents fundamentally process English text computationally, with that text ultimately being parsed into binary.

However, there are various well-defined command structures that form a machine vocabulary contextual to the software / hardware environment; in effect, an instruction set.

Human Centric Software Agent Considerations

Therein, one of the SafetyProtocols-related considerations should be the implications of computer-generated deterministic content or artifacts interfering with AI modelling techniques, due to AI-related inputs that are not properly labelled.

For example: 'autocorrect' changes the meaning of a sentence, and the altered sentence is thereafter used to determine attributes about the natural agent.
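
A minimal sketch of the labelling point, assuming each token carries an origin label ("human", "machine", or none): only tokens explicitly labelled as human-authored are passed to any process that determines attributes about the natural agent, so machine-altered and unlabelled input is excluded by default. The `Token` type, the labels, and the word-frequency "profile" (a toy stand-in for attribute determination) are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Token:
    text: str
    origin: Optional[str]  # "human", "machine", or None if unlabelled


def human_authored(tokens: List[Token]) -> List[str]:
    """Keep only tokens explicitly labelled as human-authored.
    Machine-altered and unlabelled tokens are treated as untrusted."""
    return [t.text for t in tokens if t.origin == "human"]


def vocabulary_profile(tokens: List[Token]) -> Counter:
    """Toy stand-in for 'determining attributes': word counts computed
    only over human-authored tokens."""
    return Counter(human_authored(tokens))


# "affect" was replaced by autocorrect; "flat" arrived without a label.
tokens = [Token("the", "human"), Token("patient's", "human"),
          Token("effect", "machine"), Token("was", "human"),
          Token("flat", None)]
profile = vocabulary_profile(tokens)
```

Excluding unlabelled input, rather than assuming it is human, reflects the concern above: inputs that are not properly labelled should not be allowed to shape conclusions about a person.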

Natural Language Modelling

The English language has evolved over more than 1000 years, which makes it a fairly new language in the scheme of things; yet its formation has drawn on many terms and words used by various people who spoke various languages at various times, in various places.

Some investigation into related considerations can be found in the ChatGPTDynamicOntology and AChatGPTExperimentHolborn chatlogs; overall, gaining the volume of insights considered usefully important is still a work in progress. Indeed, there is an array of advanced concepts in which I am unlikely ever to be the expert. So, whilst BestEfforts are being made, the deliberations are about seeking to ensure that the foundational constructs support the SocialFactors, and in particular any SafetyProtocols (whether noted or yet to be noted) that may be of instrumental importance in supporting the broader implicit and explicit requirements of [[TheValuesProject]].

Anecdotally, it appears to me that these sorts of problems have not been formally addressed in a FitForPurpose way. This is unlikely to be due to negligence on the part of the scientists involved at the time (although SocialAttackVectors are very likely to have played a role in the consequential application of technology); rather, it is more likely that the technology of the time was unable to do the sorts of things that might otherwise have been done, so sacrifices were made, and these were then built upon by others who used old work as foundational components for new works.

In effect, the implication relates to the concept of TechDebt, although not in the way that article currently describes it. There is a requirement to ensure that human beings maintain primary responsibility for what they do and for the effect of what they say. AI models can be used to pervert and interfere with those sorts of underlying 'calculations' in ways that can lead to unwanted and/or unintended consequences; this in turn relates to broader issues such as those noted about NobodyAI.

So, the design implications, with respect to the broader and future objectives of the webizen ecosystem in relation to forming a semantic vocabulary method and apparatus (program), are considered in what follows.

Whilst my study is not exhaustive (people spend their entire lives studying these fields, which is not what I have done or do), it appears to me that the roots of English form a fabric that likely relates to trade and the movement of people (including conflicts). This results in considerations that are temporally geospatial in nature, which thereafter become influenced by the politics and ideologies associated with groups over the course of space-time.

Producing a 'system of excellence' is likely to require an enormous amount of computational capability, and in turn to be forged from the known languages of at least the last 1500 years. This is a relatively simple task compared with modelling many older languages, yet these languages are nonetheless all somewhat 'linked together'.

These models would need to be defined in a way that supports continual training / learning.

Yet such models are unlikely to provide a solution that can work on the devices people commonly use, such as mobile phones and personal computers.

It is essential to support a technique that enables the private use of language in a manner that produces artifacts comprehensible to other Webizen Owners / users (human beings and, in turn, group entities such as companies).

Therefore, a high-level model is required that supports extensibility whilst also providing a basic component that requires relatively few computational resources on the device it runs on. Existing dictionary software, which has been around for a very long time, demonstrates that such a component is feasible; however, the desired functionality of this component is intended to be far more sophisticated.
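
One way to picture the combination of private use, shared comprehensibility and low resource cost is a layered vocabulary: a small shared base maps terms to concept identifiers, an owner adds private terms that must point at one of those shared concepts, and exporting rewrites private terms into shared ones. This is a minimal sketch under those assumptions; the class, its methods, and the `ex:` identifiers are hypothetical placeholders, not an existing Webizen API or ontology.

```python
from typing import Dict, Optional


class LayeredVocabulary:
    """Hypothetical sketch: a shared base vocabulary plus an owner's private
    extensions, each mapped to a shared concept identifier. Lookups are
    plain dictionary reads, so the component is cheap to run on a device."""

    def __init__(self, shared: Dict[str, str]) -> None:
        self.shared = dict(shared)         # shared term -> concept identifier
        self.private: Dict[str, str] = {}  # private term -> concept identifier
        # Reverse index used when exporting; if several shared terms name
        # the same concept, the last one listed wins.
        self._term_for_concept = {c: t for t, c in self.shared.items()}

    def define_private(self, term: str, concept: str) -> None:
        """Extend the vocabulary privately; the term must map to a known shared concept."""
        if concept not in self._term_for_concept:
            raise KeyError(f"unknown shared concept: {concept}")
        self.private[term] = concept

    def resolve(self, term: str) -> Optional[str]:
        """Private definitions take precedence over shared ones."""
        return self.private.get(term, self.shared.get(term))

    def export(self, text: str) -> str:
        """Rewrite private terms as shared terms so others can read the artifact."""
        return " ".join(
            self._term_for_concept[self.private[w]] if w in self.private else w
            for w in text.split()
        )


vocab = LayeredVocabulary({"home": "ex:Dwelling", "car": "ex:Vehicle"})
vocab.define_private("digs", "ex:Dwelling")
# vocab.resolve("digs") gives "ex:Dwelling";
# vocab.export("my digs and car") gives "my home and car".
```

Whitespace tokenisation and one-to-one term substitution are deliberate simplifications; a more sophisticated component would need to handle punctuation, word senses and context, which is where the heavier models discussed above would come in.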

Fundamentally, the objective is also not simply to support the English language. As I do not know other languages well enough, the only reasonable approach available to me is to focus on the language I know, and to hope that some of the insights, and all of the derivative 'product' output, are useful to those better equipped to implement other languages. In many ways, this is a moral hygiene consideration: the preference is not to define others via a field of work / study / knowledge that I do not know. This is intended to be interpreted as an attitude of care and consideration, rather than the opposite.